My CogX Day 1 Highlights - 26.09.23.

5 talks from CogX that will expand your AI knowledge

1. Talk 1 - Tristan Harris

Wins my award for - most influential

Summary - Tristan Harris (an American technology ethicist) commanded the main stage with equal doses of integrity and determination. He comes across as genuine when he describes taking the AI Safety fight to leaders of US big tech by day before setting down swords and sharing a pleasant evening meal with them. You see, his fight is not against his good friends but against the organisations they represent. Perhaps his soft power is his primary weapon.

Tristan explains that we are living in a time of second contact - the creation AI era. The first contact was the social media era - humanity’s initial encounter with AI.

Today we are witnessing a race to deploy impressive new capabilities as fast as possible (e.g. DALL·E 3). This is not a safe race. We are seeing exponential growth in misinformation, fraud and crime, and mass institutional overwhelm.

If nobody wants all of this harm, how can we fix it? Generative AI has emergent capabilities that its programmers can’t control. Just think about that. How can you govern what you can’t control!? Organisations are progressing so quickly that even the builders can’t understand or predict what their models will do. A tech columnist used the Generative AI built into Snapchat while pretending to be a 13-year-old. The results were terrifying.

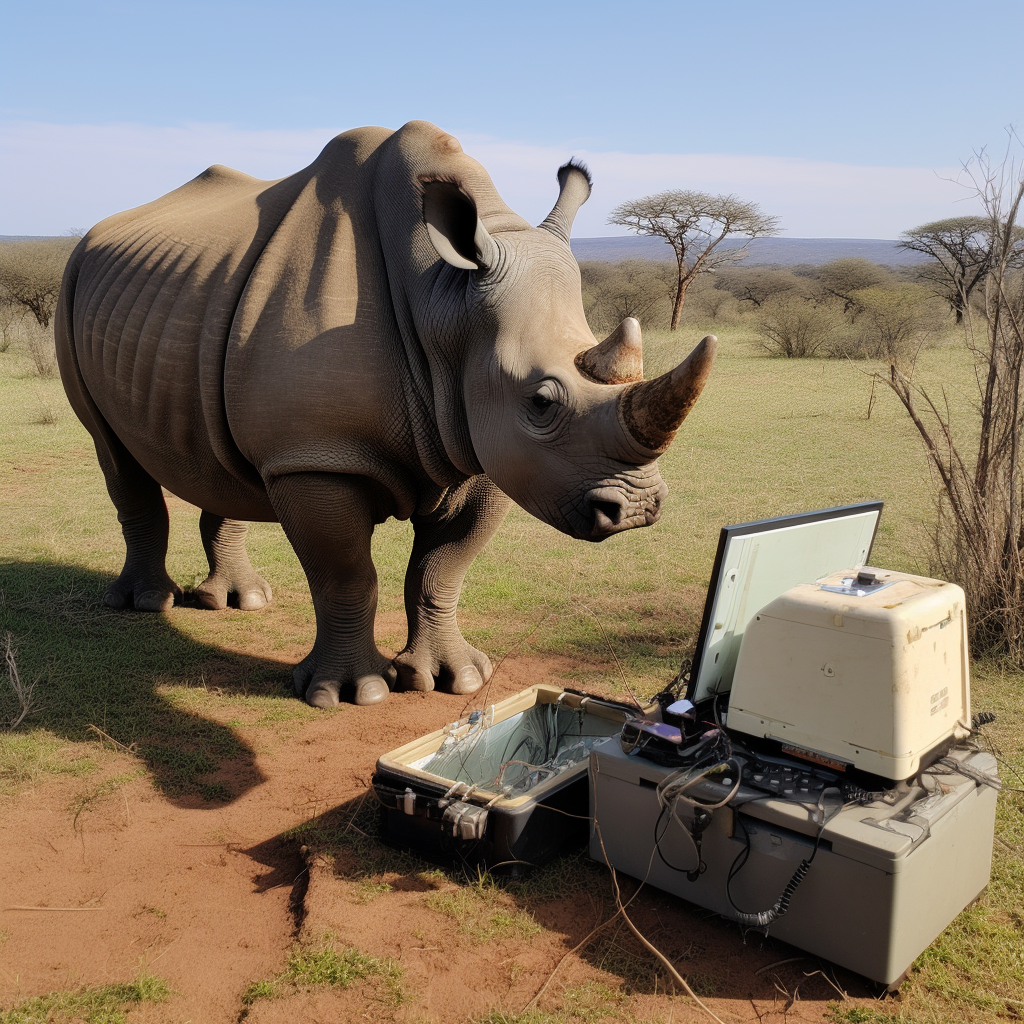

The ratio of AI builders to AI Safety researchers is 30:1. If you set up a game reserve with the same poacher-to-keeper ratio, the poachers would make a lot of money and the animals would probably break out and run as free as generative algorithms are right now.

Tristan says that we can choose the future we want:

Do we care about not repeating the same mistakes?

What can you personally do to influence this path?

Tristan’s solution:

Coordinate the race - internationally.

Emergency brakes - right now there is no plan to stop emergent capabilities. Homer Simpson can smash the glass in the nuclear power plant, but for AI there is no red button to push. Doh!

Limits on open source - Llama 2 is very dangerous. It took one engineer on Tristan’s team $100 to remove the safety controls. They then received an answer when asking ‘how to make anthrax?’

Liability - if organisation leaders are personally liable, this will automatically slow down the race.

Mitigation strategies - watermarking, etc.

Why this matters - in the last 5 years AI Safety has gone from not even being on the CTO’s radar to one of the top 5 issues for most CEOs. This is wonderful progress! My former CEO just made this point in the UK media. The UK Government are at the forefront of international coordination.

In a previous blog post I wrote about the importance of learning from the algorithmic lessons of the past. I remain optimistic that the UK AI Safety Summit can set us on a different path.

What can you do for your company? The 1983 film The Day After initially depressed Ronald Reagan before motivating him to push forward with the Reykjavik Summit. What is your Reykjavik when it comes to AI Safety? Don’t just think about it - perhaps have a chat with your boss or employees about your hopes for the future of Generative AI. People often tell me that accountability for AI Safety sits squarely with the technical folk (the AI builders). I actually think we will make the most tangible progress in AI Safety if every non-technical employee takes proactive steps to understand what needs to change in their day job.

2. Talk 2 - Weaviate - Bob van Luijt

Wins my award for - most energetic

Summary - Bob van Luijt (CEO) gave an energetic performance with no visual aids. He was adept at illuminating complex topics with palatable examples.

When it comes to natural language, such as the relationship between dog and bone (compared to cat and bone), we map out relationships between words as points in space. Picture it in 3D, although real models use hundreds or thousands of dimensions. We place the word bone closer to dog than to cat. These representations are called embeddings: each one is a vector (a list of numbers) that comes out of the model, and models learn to predict them. More dimensions means more context.
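To make the dog/bone/cat idea concrete, here is a minimal sketch. The three-dimensional vectors are invented purely for illustration (a real embedding model would produce them, with far more dimensions), and cosine similarity is assumed here as the closeness measure because it is a common choice for comparing embeddings.

```python
import math

# Toy 3-D "embeddings", invented for illustration only.
# Real embedding models output hundreds or thousands of dimensions.
EMBEDDINGS = {
    "dog": [0.9, 0.8, 0.1],
    "cat": [0.8, 0.1, 0.9],
    "bone": [0.7, 0.9, 0.0],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


dog_bone = cosine_similarity(EMBEDDINGS["dog"], EMBEDDINGS["bone"])
cat_bone = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["bone"])
print(f"dog-bone: {dog_bone:.2f}, cat-bone: {cat_bone:.2f}")
```

With these made-up numbers, bone sits much closer to dog than to cat, which is exactly the relationship the embedding is meant to capture.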

Semantic search came along in 2012. It describes how a search engine attempts to decipher the meaning behind any given search based on intent, context and the relationship between words (e.g. Big Ben is associated with London). Before, we needed a keyword match. Now, when a user searches ‘landmarks in the UK’, the search can return a data object such as ‘Big Ben is in London’, even though the two share no meaningful keywords, because they sit close together in vector space.
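A rough sketch of the difference between keyword matching and semantic search, using the Big Ben example. The word vectors are hypothetical hand-made numbers standing in for a real embedding model, and averaging word vectors into a sentence vector is a deliberately crude assumption; it is enough to show that a query with no overlapping keywords can still land on the right document.

```python
import math

# Hypothetical word vectors, invented for illustration.
# Loose dimensions: [geography, landmark/tourism, animals]
WORD_VECTORS = {
    "landmarks": [0.4, 0.9, 0.0],
    "uk": [0.9, 0.3, 0.0],
    "big": [0.1, 0.6, 0.1],
    "ben": [0.2, 0.8, 0.0],
    "london": [0.9, 0.5, 0.0],
    "cats": [0.0, 0.0, 1.0],
    "sleep": [0.0, 0.1, 0.7],
    "lot": [0.1, 0.1, 0.1],
}
STOPWORDS = {"in", "the", "is", "a"}
DOCS = ["Big Ben is in London.", "Cats sleep a lot."]


def tokens(text):
    words = text.lower().replace(".", "").split()
    return [w for w in words if w not in STOPWORDS]


def embed(text):
    # Crude sentence embedding: average the vectors of the known words.
    vecs = [WORD_VECTORS[w] for w in tokens(text) if w in WORD_VECTORS]
    if not vecs:
        raise ValueError(f"no known words in {text!r}")
    return [sum(dim) / len(vecs) for dim in zip(*vecs)]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def keyword_search(query, docs):
    # Old approach: a document matches only if it shares a keyword.
    q = set(tokens(query))
    return [d for d in docs if q & set(tokens(d))]


def semantic_search(query, docs):
    # Vector approach: return the document whose embedding is closest.
    q = embed(query)
    return max(docs, key=lambda d: cosine(q, embed(d)))


query = "landmarks in the UK"
print(keyword_search(query, DOCS))   # → []
print(semantic_search(query, DOCS))  # → Big Ben is in London.
```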

When ‘Attention Is All You Need’ was published in 2017, Weaviate started to understand what Google meant by AI-first. The quality of sentence embeddings ‘went up like crazy.’ Google were vectorising search.

In the Generative AI era, the use case has moved from search to generation. What is possible with generative models plus vectorisation models? Fine-tuning is expensive and takes a long time; is there another way?

Vector databases can make AI models stateful (retrieval augmented generation)! Weaviate realised the potential for vector databases to be used in combination with foundation models. AI models are inherently stateless (they have no memory). Excel spreadsheets, for example, are stateful (formulas are still there 2 days later). So, when it comes to memory, Weaviate are helping us move towards a world where foundation models go from bees to dolphins. For those of you who prefer images when remembering concepts …
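Here is a minimal sketch of how retrieval augmented generation gives a stateless model a memory. It is not Weaviate’s API: `InMemoryVectorStore` and `build_prompt` are hypothetical names, a bag-of-words count vector stands in for a real embedding model, and the final call to a foundation model is left out. The point is the shape of the loop: store facts as vectors, retrieve the closest ones, and put them in front of the question.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in for a real embedding model: a bag-of-words count vector."""
    cleaned = text.lower().replace(".", "").replace("?", "")
    return Counter(cleaned.split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical minimal vector store: (text, vector) pairs kept in a list."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # New knowledge is just a new row - no model retraining involved.
        self.items.append((text, embed(text)))

    def query(self, question: str, k: int = 1) -> list[str]:
        q = embed(question)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question: str, store: InMemoryVectorStore, k: int = 1) -> str:
    # Retrieval: fetch the k most similar stored facts.
    context = "\n".join(store.query(question, k))
    # Augmentation: place them in front of the question. Generation (sending
    # this prompt to a foundation model) is deliberately left out.
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


store = InMemoryVectorStore()
store.add("The office canteen opens at 8am.")
store.add("Expense claims must be filed within 30 days.")
print(build_prompt("When must expense claims be filed?", store))
```

Keeping a model up-to-date then becomes a matter of calling `add()` with new facts rather than fine-tuning, which is the trade-off the talk was pointing at.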

Why this matters - retrieval augmented generation can make foundation models more up-to-date, more reliable and a little more trusted (by cross-checking answers against retrieved sources). The basic premise of making NLP models more intelligent is nothing new. In 2020 I worked on a 6-month GENBERT project, taking a pre-trained model and training it on legal contracts. We had pretty good success using it to help a Legaltech business build software that helps lawyers identify the minimum term of a contract. Technology and techniques mature, but the premise stays the same: how can we help humans save time on knowledge-intensive tasks?

So why exactly are Meta and even Sequoia so excited? Below are two reasons why this is exciting and different from the ‘traditional’ AI world I used to work in.

Reason 1 - Better ROI on data science - when it comes to NLP, Meta say there has been a ‘marked change in approach’ in the last few years. This is eye-opening for every data science team on the planet. It used to take a team 6 months on an experimental, high-risk project. With foundation models you can get faster results and higher accuracy, and you will need a slightly different skillset to deliver the project. Less model retraining = less data science. More focus on up-to-date data = more data engineering. It is still transfer learning, just in a different guise.

Reason 2 - Increased value from existing data (broad application to numerous use cases) - at a time when Generative AI feels tactical and reactive, organisations should be thinking about what they can control now. Every business has a database that it often tells investors is its proprietary data (secret sauce), and every business wants to keep that data up-to-date and optimised as efficiently as possible.

So what does this mean for the AI strategy of my own organisation? Organisations should be thinking about how to turn their specific domain expertise into a valuable asset such as a vector database (see here for the future of databases vs vector databases), giving themselves long-term strategic value as they continuously exploit future foundation models. They can’t control what OpenAI will launch and when, but they can keep investing in, and learning, the intelligence they need to build to exploit these models as they come to market. In the future, all foundation models will need a long-term memory they can draw upon when executing complex tasks.

Give me some tangible examples I can tell my company about …

If you are starting to pipe data into a generative model, why not store the output back in the database?

Travel sector example - imagine accommodation listings stored in a database where the descriptions are either missing or inadequate. Tell the foundation model: every time you spot a gap, generate a new description and give it a vector representation. In a world of messy and incomplete data, this is massive!
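A sketch of that gap-filling loop, under clearly stated assumptions: `Listing`, `generate_description`, `embed` and the `min_words` threshold are all hypothetical. `generate_description` fakes a foundation-model call with a template, and `embed` returns a placeholder vector where a real embedding model would go. What matters is the flow: detect a missing or thin description, generate a replacement, and write it back together with its vector.

```python
from dataclasses import dataclass, field


@dataclass
class Listing:
    listing_id: int
    name: str
    amenities: list[str]
    description: str = ""
    vector: list[float] = field(default_factory=list)


def generate_description(listing: Listing) -> str:
    # Hypothetical stand-in for a foundation-model call.
    return f"{listing.name} offers {', '.join(listing.amenities)}."


def embed(text: str) -> list[float]:
    # Hypothetical placeholder vector (character and word counts);
    # a real system would call an embedding model here.
    return [float(len(text)), float(len(text.split()))]


def fill_gaps(listings: list[Listing], min_words: int = 5) -> int:
    """Generate and embed descriptions that are missing or too short."""
    filled = 0
    for listing in listings:
        if len(listing.description.split()) < min_words:
            listing.description = generate_description(listing)
            listing.vector = embed(listing.description)  # write back with a vector
            filled += 1
    return filled


listings = [
    Listing(1, "Harbour View Flat", ["sea views", "free Wi-Fi"]),
    Listing(2, "Old Mill Cottage", ["log burner"],
            "A cosy converted mill with a log burner, garden and river walks nearby."),
]
filled = fill_gaps(listings)
print(filled, listings[0].description)
```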

The future - a vector database paired with a foundation model is doing something completely new: together they form fully autonomous systems you can interact with. The result could be better data, replaced data or deleted data. Also, imagine this as multimodal: the output could be an image, audio, a heat-map, etc. Product manager example request - ‘turn this product description into an image.’

3. Talk 3 - Prosus - Euro Beinat

Global Head of AI and Data Science at Prosus Group and Naspers

Wins my award for - most ahead of the curve

Summary - undoubtedly one of the most impactful and impressive examples of applied Generative AI that I have come across. You can read more about it here and here. Prosus are the largest consumer internet company in Europe, with a presence in over 100 countries. They have incredibly strong AI foundations (a network of 800 data scientists!).

Essentially, they built and released a personal assistant for their employees in August 2022 - before ChatGPT existed! At the time, many other organisations were starting to ban these types of tools.

Quick history lesson - in 2019 they started testing GPT-2. It was promising, and they realised they had to invest further. In 2020 they tested GPT-3 at scale and realised that the testers always had better ideas (they call this bottom-up innovation). In 2022 they launched it as a chatbot on Slack, designed for experimentation and powered by 20 models. Today, under the hood, dozens of proprietary models and foundation models work together.

Results:

10k users every day

48% engineering tasks, such as coding (they tag interactions as they happen)

52% non-engineering such as writing and communication

Sales, support, finance - large groups of people use it, e.g. ‘give me sales in the last month given certain conditions’. They are using language to interrogate numbers, proving that we are living in an era where anyone, regardless of technical expertise, can liberate themselves from dashboards to find something out.

Model feedback (UX) - users can react with a thumbs up, thumbs down, heart or Pinocchio (to flag a hallucination)

Pinocchios - 10% in Oct 2022, down to 3% in June 2023

Cost reduction - since Oct 2022, cost has gone from 100 (baseline) to 45

Productivity impact - 72% say higher, 20% neutral, a small percentage lower
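To put the headline numbers in perspective, here is a small sketch of how a feedback-driven hallucination rate could be tracked and how the reported figures translate into relative drops. The reaction labels and the rate definition (Pinocchio reactions divided by all rated interactions) are my assumptions; Prosus did not say how they compute it. The sample log is invented. Only the 10% to 3% and 100 to 45 figures come from the talk.

```python
from collections import Counter

# Hypothetical reaction labels mirroring the four feedback buttons.
REACTIONS = ("thumbs_up", "thumbs_down", "heart", "pinocchio")


def pinocchio_rate(reactions: list[str]) -> float:
    """Share of rated answers that users flagged as hallucinations (assumed definition)."""
    counts = Counter(reactions)
    unknown = set(counts) - set(REACTIONS)
    if unknown:
        raise ValueError(f"unknown reactions: {unknown}")
    total = sum(counts.values())
    return counts["pinocchio"] / total if total else 0.0


def relative_reduction(before: float, after: float) -> float:
    return (before - after) / before


# Invented sample log, for illustration only.
sample = ["thumbs_up"] * 6 + ["heart"] * 2 + ["thumbs_down"] + ["pinocchio"]
print(pinocchio_rate(sample))       # 0.1
print(relative_reduction(10, 3))    # 0.7 -> 70% fewer Pinocchios, Oct 2022 to June 2023
print(relative_reduction(100, 45))  # 0.55 -> 55% lower cost than the Oct 2022 baseline
```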

Proven innovation model - Find it, pin it, scale it!

The future - there is so much more to come. Prosus believe they have only discovered 20% of what is possible.

Why this matters - this is incredibly tangible and exciting for any organisation or startup that needs inspiration, or something more concrete to take to their business, to get going with Generative AI and solve internal problems for employees. It is a great example of the real-world application and value of the vector databases discussed in the Weaviate talk.

Every business has people. These people come and go. Domain expertise therefore flows in and out. This domain expertise can be captured as data - some good, some bad, some out of date. If this data can be augmented with the power of foundation models (general purpose AI) then employees can execute tasks faster and to a higher standard. Given that this technology is maturing rapidly, in 12-24 months’ time, organisations will find this to be a key source of competitive advantage. It feels obvious because we all waste so much time on knowledge management and finding the right tool/software to help us in our work.

Businesses therefore have a choice: either invest in capabilities now to exploit this, or buy the software. Innovation is hard and risky, so many organisations will look to buy this software in. It is therefore entirely logical that Prosus would look to monetise (with pricing tailored to business needs) their solutions to problems they have already solved for 25+ organisations. Dawn Capital, a VC firm, just announced a $700m fund for B2B software startups. They claim that AI-powered analysis replacing business intelligence is one of the more viable ideas they have seen. My previous company monetises decision intelligence AI. These are all slightly more nuanced ways of providing an organisation with a brain, with the AI doing the grunt work of analysing the data that flows through the business.

This idea of turbo-charging and/or outsourcing the skills that an organisation needs with Superminds, collective intelligence, or even swarm intelligence is very on-trend. Technologies that fall into the Generative AI bucket are cranking up the excitement level a few notches. I see new businesses like this almost on a daily basis.

Finding the killer use case and the specific problem to solve is never easy; this is the search for product/market fit. Prosus have identified it via their 10k internal users. Super smart.

4. Talks 4 & 5 - Artificial General Intelligence

Talk 4 - Connor Leahy, CEO of Conjecture

Wins my award for - most concerning, but also most eloquent

2019 was his ‘Oh Sh!t’ moment: experimenting with GPT-2, he was struck by its potential. To use a golf analogy, both Connor and Tristan Harris are shouting ‘fore’. Both retain the optimism that the ball will not land on your head, but Connor’s shriek will make you quit golf for good. He describes the current Generative AI situation as a ‘death race toward the precipice.’ He calls out the AI builders, whom I unfairly described as poachers earlier: ‘most of them are pretty good people but they justify their work based on the premise that the others building are worse than me so I have to build it.’ Fascinating. This will be remembered as a time of zero regulation, where many companies are building Segways capable of reaching 100mph, each claiming to have the safest product. 100 million+ consumers are riding around with no guardrails, big tech are delivering shareholder returns and governments around the world are trying their best to work out how many should be allowed on the road and what the speed limit is.

Connor concludes with hope and optimism in identical fashion to Tristan, teeing up the UK AI Safety Summit on November 1st. Conjecture are definitely a company to keep an eye on. Perhaps working there will be your Reykjavik moment?

Talk 5 - Stuart Russell

Wins my award for - most respected in the field

Professor of Computer Science at UC Berkeley

It isn’t easy to write about someone so renowned and ubiquitous, so I will keep it brief. I have followed him closely since he gave a talk at my previous employer, and I am a big fan of his 2019 book Human Compatible. Professor Russell is an optimist and a pragmatist and, as a holder of multiple passports, isn’t afraid to put pressure on big tech on either side of the pond. People are very tuned in to his understated assessment of what is going wrong and what can be done about it.

If we put Russell in charge of all the Segways, I am pretty sure his stance would align with how Parisians are dealing with e-scooters. He is, after all, a prominent figure in the 6-month pause movement. He persistently emphasises the positives of what has been achieved over the last 6 months. He is in awe of the power of GPT-4 but, when it comes to AI Safety, feels we have taken a backward step given that it imitates human linguistic behaviour. He is an advocate of the Homer Simpson red button (he just calls it a kill switch) and envisions a world where we design machines that must act in the best interests of humans while being explicitly uncertain about what those interests are. He believes this can be formulated mathematically as an assistance game.
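Why would uncertainty make a machine accept a kill switch? A toy version of the ‘off-switch game’ studied by Russell and colleagues gives the intuition. This is a simplified sketch, not his formal assistance-game model: the belief numbers are invented, and it assumes a perfectly rational human who lets the action go ahead only when it is genuinely good for them.

```python
# Toy "off-switch game": the robot is unsure whether its proposed action
# is good (u > 0) or bad (u < 0) for the human. Numbers are invented.


def expected(belief: list[tuple[float, float]], payoff) -> float:
    """Expected payoff over a belief given as (probability, utility) pairs."""
    return sum(p * payoff(u) for p, u in belief)


def option_values(belief: list[tuple[float, float]]) -> dict[str, float]:
    return {
        "act": expected(belief, lambda u: u),  # act without asking
        "switch_off": 0.0,                     # do nothing at all
        # Defer: assumes a rational human who allows the action only when
        # u > 0 and presses the off switch otherwise.
        "defer": expected(belief, lambda u: max(u, 0.0)),
    }


uncertain = [(0.6, 10.0), (0.4, -20.0)]
values = option_values(uncertain)
print(values, max(values, key=values.get))

certain = [(1.0, 10.0)]
print(option_values(certain))
```

With an uncertain belief, deferring to the human (and so leaving the off switch in their hands) beats both acting and switching off. Once the robot is certain, deferring offers no advantage over acting, which is why Russell wants machines that stay explicitly uncertain about human preferences.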

I can’t wait to see how much progress he makes at Bletchley Park designing his kill switch.